Algolia, You Autocomplete Me

A simple client request quickly spiraled into days of frustrating debugging. A tale of mystery, poor documentation, and one man’s quest to understand “why?”.

A Three Hour Fix

My current project uses the Algolia service to provide a search backend for our data. We combine this with their JavaScript library, autocomplete, to create a simple but powerful search experience for our users. Recently we started developing public-facing pages for the first time. This led to us taking another pass over our responsive CSS styles to make sure that the application provides a good experience for mobile and tablet visitors. One of the fruits of this analysis was our client noting that the mobile search dropdown results look a little clunky and waste white space. With the general direction of “Try and improve the mobile search experience” I set out on what I assumed would be a simple task… Sometimes I’m not a smart person.

We use Tailwind CSS, which amusingly features a testimonial on its homepage from one of Algolia’s engineers. I knew that Tailwind provided reasonable built-in ways to hide and show different components based on the current window size. My plan was to use this with two different instances of the autocomplete library, one configured for desktop and one for mobile, knowing that Tailwind would only ever display one at a time.

Everything started out OK. The initial proof of concept was working and I was able to start making deeper changes between the two flows. However, small problems began to crop up where I didn’t have quite enough control over what autocomplete was rendering. I began to fear that I was going to have to sneak a hacky solution, like using JavaScript to change styles after rendering, through code review. This was less than ideal, and I was also getting annoyed that the documentation seemed to have little to do with the code I was actually seeing in our project.

These inconsistencies made me wonder just how far behind our version of autocomplete was. The wonders of source control revealed that we had implemented search around four years ago. Since it just kept working, no one had touched it beyond minor styling changes. Looking at our version listing I found we were still using 0.31.0, a pre-alpha release, while the product was now at 1.7.1. The difference between versions was far-reaching and bundled changes for everything from the entry point used to create an autocomplete plugin to the allowed formats for display templates.

Looking over the changes, I did find some compelling new features. It offered special handling for mobile experiences; namely a detachedMode which simulates native full screen search functionality on mobile devices. As a bonus it also gave us better access to the rendered results for styling. Feeling like this was the best choice in the long term, I committed to upgrading our version of autocomplete.

Highlighting Your Problems

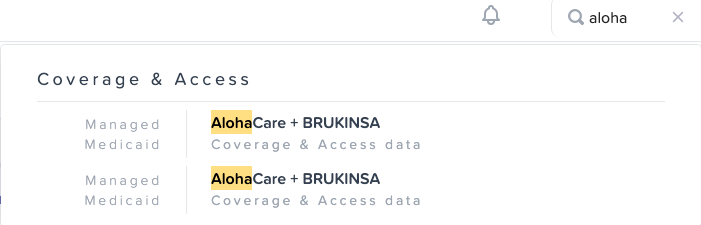

I started by gutting our mobile search and recreating it in the new version. This would let me keep the desktop version to benchmark against while guaranteeing that I could create a good mobile experience first. It was smooth sailing and at the end of the day I had almost our entire existing experience recreated… Except for one little hiccup. We use result highlighting to show what parts of the result match the query the user typed in. The desired effect is something like this:

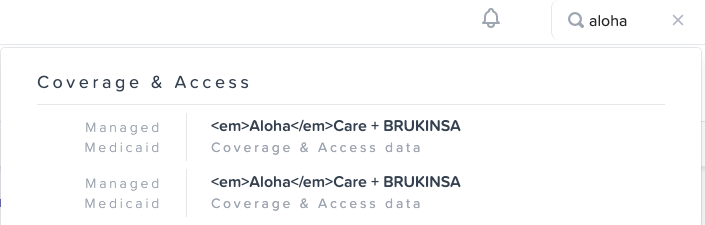

However our results were now rendering like this:

OK, fine, let’s pull up the docs for Algolia and make sure we’re using the highlight component correctly. Here is the provided sample code and documentation.

> Templates expose a set of built-in components to handle highlighting and snippeting.

    templates: {
      item({ item, components, html }) {
        return html`<div>
          <a href="${item.url}">
            ${components.Highlight({
              hit: item,
              attribute: 'name',
              tagName: 'em',
            })}
          </a>
        </div>`;
      }
    }

Everything looked correct, but upon inspecting the rendered HTML I found that our output was actually &lt;em&gt;Aloha&lt;/em&gt;. It seemed like something was escaping the em tags that were being added by the highlight component! This would prevent them from rendering as HTML and thus from being styled appropriately. Feeling like I had a good lead, I began going down rabbit holes.
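To make that symptom concrete, here is a minimal sketch of what HTML escaping does to highlight markup. The escapeHtml helper is hypothetical and written purely for illustration. It is not taken from autocomplete; it only assumes the library does something equivalent when it treats a value as plain text.

```javascript
// Hypothetical helper for illustration only, not autocomplete's own code.
function escapeHtml(text) {
  return text
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;');
}

// A highlighted value marked up with Algolia's default <em> tags.
const highlightedValue = '<em>Alo</em>ha';

// Once escaped, the browser shows the tags as literal text instead of
// rendering an emphasized "Alo".
const escaped = escapeHtml(highlightedValue);
console.log(escaped);
```

If the markup reaches the page in this escaped form, no amount of CSS on em elements will help, because there are no em elements in the DOM to style.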

Was it a difference between tagged template strings and the previous implementation that exported a plain string? Perhaps it was an issue with our version of Preact. Would switching to JSX instead of template strings solve the issue? Maybe using the interpolation form instead of the function call to the highlight component? At one point I even edited our template and data into a working autocomplete sandbox to see if I could recreate the problem, but without success.

I pored over these working examples looking for differences. One of the smaller things I noticed was that we had written our own function for querying the Algolia backend instead of using the provided getAlgoliaResults helper. Worried, I pulled up the documentation for it.

> The getAlgoliaResults function lets you query several Algolia indices at once.

I was hesitant to replace our custom Algolia call since I would have to recreate a fair amount of logic. This documentation was great news though; it indicates that the helper is designed to facilitate calling out to multiple indexes at the same time and collating their results. Obviously since we don’t need either of those features we’re safe not using it.

After several days of fruitless experiments I was back retracing my steps and going over all my assumptions. This meant digging into the actual implementation of the highlight component as well as looking at the network requests in our project vs the working sandboxes. It was here that I finally realized what was going wrong. Deep in the internals of highlighting I found a parseAttribute function that was used for splitting the string apart around the section to highlight.

    export function parseAttribute({
      highlightedValue,
    }: ParseAttributeParams): ParsedAttribute[] {
      const preTagParts = highlightedValue.split(HIGHLIGHT_PRE_TAG);
      const firstValue = preTagParts.shift();
      const parts = createAttributeSet(
        firstValue ? [{ value: firstValue, isHighlighted: false }] : []
      );
      preTagParts.forEach((part) => {
        const postTagParts = part.split(HIGHLIGHT_POST_TAG);
        // snip
      });

Ah ha! It wasn’t splitting on em tags that Algolia defaults to marking highlighted results with, which was what I expected. It was splitting on these constants instead:

    export const HIGHLIGHT_PRE_TAG = '__aa-highlight__';
    export const HIGHLIGHT_POST_TAG = '__/aa-highlight__';

Checking the responses in the sandbox, I could see that the highlight results from their Algolia backend were in fact coming in with these custom tags instead of em. Finding usages on this pair of constants turned up a fetchAlgoliaResults method that was being wrapped by our friend getAlgoliaResults mentioned earlier. And what does this little guy do?

    params: {
      highlightPreTag: HIGHLIGHT_PRE_TAG,
      highlightPostTag: HIGHLIGHT_POST_TAG,
      ...params,
    }

This method was adding a pair of custom highlight parameters to any search params passed into it, so every query picked up the custom tags without the caller ever asking for them. Note that because ...params is spread last, tags you specify yourself would still take precedence in this snippet, and would then no longer match what the highlight component splits on. getAlgoliaResults was making sure the custom tags would be present, as they are now required by the rest of the autocomplete toolchain. Adding these settings to our own search parameters caused everything to start working.
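A minimal sketch of that fix, for anyone else with a hand-written search call. The two constants are the ones quoted above. Everything else (withAutocompleteHighlightTags, splitHighlightedValue, the sample strings) is hypothetical and simplified for illustration, not the library's implementation.

```javascript
// Constants as quoted from the autocomplete source above.
const HIGHLIGHT_PRE_TAG = '__aa-highlight__';
const HIGHLIGHT_POST_TAG = '__/aa-highlight__';

// Hypothetical helper: merge the required tags into custom search params.
// The tags come last here so a caller cannot override them by accident.
function withAutocompleteHighlightTags(params = {}) {
  return {
    ...params,
    highlightPreTag: HIGHLIGHT_PRE_TAG,
    highlightPostTag: HIGHLIGHT_POST_TAG,
  };
}

// Simplified stand-in for the parser: text between the pre and post tags
// is marked as highlighted, everything else is not.
function splitHighlightedValue(highlightedValue) {
  const parts = [];
  const preTagParts = highlightedValue.split(HIGHLIGHT_PRE_TAG);
  const firstValue = preTagParts.shift();
  if (firstValue) parts.push({ value: firstValue, isHighlighted: false });
  for (const part of preTagParts) {
    const [highlighted, rest] = part.split(HIGHLIGHT_POST_TAG);
    if (highlighted) parts.push({ value: highlighted, isHighlighted: true });
    if (rest) parts.push({ value: rest, isHighlighted: false });
  }
  return parts;
}

const searchParams = withAutocompleteHighlightTags({ hitsPerPage: 5 });

// With the custom tags, the match is found and split out.
const withCustomTags = splitHighlightedValue('__aa-highlight__Alo__/aa-highlight__ha');

// With default <em> tags, nothing matches and the whole string is one
// non-highlighted part, markup included.
const withEmTags = splitHighlightedValue('<em>Alo</em>ha');
```

The second case is the failure described earlier: the parser never sees its tags, so the em markup is carried along as ordinary text.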

Honestly, I was too relieved to even be properly frustrated at this point; that would come later. I had to know: had I just missed this in my countless trips through the docs? I went over all the documentation again but found nothing even hinting at changes to the search params or their importance. I created an issue on their GitHub and was able to get them to update the section on highlighting with templates.

> You can use these components to highlight or snippet Algolia results returned from the getAlgoliaResults function.

They also added a mention to the getAlgoliaResults description.

> Using getAlgoliaResults lets Autocomplete batch all queries using the same search client into a single network call, and thus minimize search unit consumption. It also works out of the box with the components exposed in templates.

While I still think this might undersell the relationship between these components, it is a much stronger message about the importance of getAlgoliaResults.

Reverse Demo Luck

Pleased with my discovery I quickly finished up replicating the rest of our old functionality in the new version. I put it together just in time to show off the mobile version in our weekly demo to the client and everything went flawlessly; I should have known this was a warning. After the demo I took our changes and started applying them to the desktop version of the site.

And… Everything broke. Functionality I was certain had been working during the demo, like on-focus behavior, stopped working. I settled in for another round of debugging, systematically reversing changes to the desktop view to find the culprit. Eventually it became clear that I was not going to find what was wrong on our end, and I went back to the GitHub repository for autocomplete.

One thing that I knew was broken was the set of events that triggered on the search bar gaining or losing focus. Using that as a starting point, I set about exploring the code base looking for things related to focus changes and event handling. It didn’t take long before I found myself in the handler for touch and move events, where a code comment and method caught my eye.

    // @TODO: support cases where there are multiple Autocomplete instances.
    // Right now, a second instance makes this computation return false.
    const isTargetWithinAutocomplete = [formElement, panelElement].some(
      (contextNode) => {
        return isOrContainsNode(contextNode, event.target as Node);
      }
    );

Well… damn. The tool loses the ability to tell if a given item being interacted with is part of the autocomplete panel or not if multiple autocomplete implementations exist on the same page. This manifests as a partial breakdown of certain behavior but most of the tool keeps working. This rather large limitation of the software is not mentioned in documentation outside of a low level code comment.
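Here is a rough sketch of why a second instance trips that check. It uses plain objects as stand-ins for DOM nodes, and makeNode, makeInstance, and the re-implemented isOrContainsNode are all hypothetical. It only models the containment test from the snippet above, not what the library does with the result.

```javascript
// Minimal stand-in for a DOM node: each node only knows its parent.
function makeNode(name, parent = null) {
  return { name, parent };
}

// Same idea as the check in the snippet, re-implemented for plain objects:
// walk up from the child and see whether we reach the candidate parent.
function isOrContainsNode(parent, child) {
  for (let node = child; node; node = node.parent) {
    if (node === parent) return true;
  }
  return false;
}

// Each hypothetical instance owns its own form and panel elements.
function makeInstance(name) {
  const formElement = makeNode(`${name}-form`);
  const panelElement = makeNode(`${name}-panel`);
  const inputElement = makeNode(`${name}-input`, formElement);
  return {
    inputElement,
    isTargetWithinAutocomplete(target) {
      return [formElement, panelElement].some((contextNode) =>
        isOrContainsNode(contextNode, target)
      );
    },
  };
}

const desktop = makeInstance('desktop');
const mobile = makeInstance('mobile');

// The user interacts with the desktop input...
const target = desktop.inputElement;

// ...and each instance evaluates the same event against its own elements.
const seenByDesktop = desktop.isTargetWithinAutocomplete(target);
const seenByMobile = mobile.isTargetWithinAutocomplete(target);
console.log({ seenByDesktop, seenByMobile });
```

The desktop instance sees the interaction as inside itself, while the mobile instance sees the very same interaction as outside. Assuming each instance reacts to "outside" interactions independently, that would be consistent with the focus behavior falling apart once both were on the page.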

I was able to address this issue by fully disabling the unused search bar based on the screen size the application was opened in. If someone switches viewing experiences while the page is open, search will not work until they refresh the page. This compromise was acceptable, as it seemed like an unlikely scenario and it keeps us from having to listen to resize events.
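The shape of that workaround can be sketched as follows. This is not our production code: the breakpoint, chooseSearchVariant, initSearch, and the initializers map are all assumptions made for illustration.

```javascript
// Assumption: 767px sits just under Tailwind's default `md` breakpoint (768px).
const MOBILE_QUERY = '(max-width: 767px)';

// Decide once, at page load, which search bar to set up.
// `matchMedia` is injected so this can run outside a browser; in the page
// you would pass (query) => window.matchMedia(query).
function chooseSearchVariant(matchMedia) {
  return matchMedia(MOBILE_QUERY).matches ? 'mobile' : 'desktop';
}

// `initializers` is a hypothetical map of setup functions, one per variant.
// Only the chosen one is called, so only one autocomplete instance exists.
function initSearch(matchMedia, initializers) {
  const variant = chooseSearchVariant(matchMedia);
  initializers[variant]();
  return variant;
}

// Example run with a fake matchMedia that reports a narrow screen.
const created = [];
const variant = initSearch(() => ({ matches: true }), {
  mobile: () => created.push('mobile'),
  desktop: () => created.push('desktop'),
});
console.log(variant, created);
```

Because the decision is made once and never revisited, there is no resize listener to maintain, at the cost of the stale-variant case described above.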

While I now get to call this “quick” fix done, this was still one of my more frustrating experiences. It certainly has me reflecting on the quality of my own documentation. One root cause for both of these issues is that even when we write our code to be modular, we often forget that modularity come documentation time, whether by treating our components as if they’ll only ever interact with our own solutions or by not mentioning a critical limit of the library.

I’ve been working through some foundational software engineering texts with a co-worker I’m mentoring. I stumbled upon this prophetic section recently and I’m not above an appeal to authority.

> If you can't figure out how to use a class based solely on its interface documentation, the right response is not to pull up the source code and look at the implementation. That's good initiative but bad judgment. The right response is to contact the author of the class and say "I can't figure out how to use this class."
>
> Code Complete: Second Edition (Steve McConnell 2004)

The book goes on to expound on how the correct response isn’t to explain the class, but to instead improve the documentation and keep doing that until the consumer is satisfied. This was written for working on a project with co-workers, where you have the ability to reach out and request improvements and help. Open source development does not have these same relationships built in. Raising an issue is just as likely to have you screaming into the void as it is to get a meaningful or helpful response.

To that end I’d argue we need to be even more dedicated to ensuring our artifacts for public consumption are documented in a thoughtful manner. Doing that effectively requires diligent effort at recording our assumptions and learning to look at our code from the outside. Be the change you want to see in the documentation.